Predicting Game Performance Via YouTube Sentiment

A guest post by Christian Yoder, who ran an interesting sentiment analysis experiment on video game trailer YouTube comments

One of the toughest challenges when making games is figuring out how well your game is likely to perform with players. Even if you gather market research, run playtests, and conduct surveys—at the end of the day nobody knows whether your game will pop off.

But in the post linked above, I wrote—only half-jokingly—that you sort of can predict whether or not a game is gonna bang based on its YouTube comments. If the peanut gallery is united in their love or disdain for what they’re seeing, surely that’s indicative of the sort of word-of-mouth a game will get upon launch, right?

This sent my dear friend Christian Yoder down a rabbit hole.

In the first ever guest post on Push to Talk, Yoder—a longtime games PM and former Insights Director on League of Legends—takes a crack at finding out just how far we can take the idea of predicting game performance via YouTube sentiment analysis.

I’ve always been suspicious of attempts to quantify fundamentally qualitative data, but when Yoder shared this piece with me, it got me thinking. His insight that LLMs are finally starting to get better at evaluating online comments makes sense. And I like that he ran his analysis on a bunch of smaller independent games—that choice revealed some of the limits of my original formulation. What if only big, established games reach the level of attention required to derive predictive responses from online audiences?

I’ll let Yoder take it from here.

—Rigney

Discovering whether a game (or game feature) is going to blow up is a notoriously difficult exercise. I say this as someone who has spent most of my career attempting to do just that. Last year, my friend Ryan wrote about his unconventional method of parsing YouTube comments as a way of getting signal.

The approach is, of course, largely tongue-in-cheek, but it’s an idea that I haven’t been able to get out of my head since first hearing about it. In my personal, completely anecdotal experience, it felt intuitively correct.

Is it possible that randos leaving comments on YouTube can actually tell you whether your game will do well simply from watching game footage?

I decided to find out.

Initial Look

How might we assess whether the emoji-filled, typo-ridden comments of anonymous YouTube users have any sort of predictive value?

This seemed like an obvious yet novel application for LLMs, which would be much faster and potentially more reliable than a human coder. I made the following prompt and was off to the races:

You are a bot that analyzes the sentiment of YouTube comments for video game trailers. Rate each comment as -1 (negative), 0 (neutral), or 1 (positive).

Copy/pasting thousands of YouTube comments into ChatGPT to get the results would be about as effective or fun as mowing a lawn with a pair of scissors, so the YouTube and OpenAI APIs came in handy for largely automating this process.

The basic flow goes like this: First, download all the comments from YouTube trailers of various games and (importantly) filter out comments made after the game actually launched. Next, ask ChatGPT to rate the sentiment of those comments. Finally, see how the results relate to a game’s success.

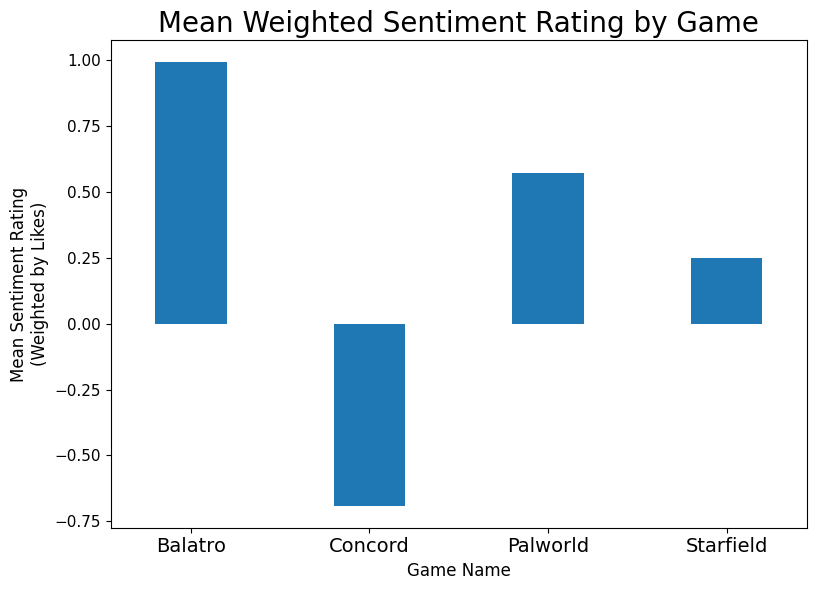
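For the curious, the filter-then-average part of that flow can be sketched in a few lines of Python. This is a minimal illustration rather than the actual script: the `published_at` and `rating` field names, the launch date, and the sample comments are all made up, and it assumes timestamps arrive as ISO 8601 strings ending in `Z`.

```python
from datetime import datetime, timezone
from statistics import mean


def parse_timestamp(stamp):
    """Parse an ISO 8601 timestamp such as '2024-09-20T15:04:05Z'."""
    # Older Python versions reject a trailing 'Z', so spell out the UTC offset.
    return datetime.fromisoformat(stamp.replace("Z", "+00:00"))


def pre_launch_comments(comments, launch):
    """Keep only the comments published strictly before the launch moment."""
    return [c for c in comments if parse_timestamp(c["published_at"]) < launch]


def average_sentiment(comments):
    """Mean of the -1/0/1 ratings, or None when there is nothing to average."""
    ratings = [c["rating"] for c in comments]
    return mean(ratings) if ratings else None


# Made-up data: a launch date and three comments that were already rated.
launch = datetime(2024, 10, 1, tzinfo=timezone.utc)
comments = [
    {"published_at": "2024-09-20T15:04:05Z", "rating": 1},
    {"published_at": "2024-09-28T08:00:00Z", "rating": -1},
    {"published_at": "2024-10-03T12:00:00Z", "rating": 1},  # after launch, dropped
]
kept = pre_launch_comments(comments, launch)
print(len(kept), average_sentiment(kept))
```

Comparing timezone-aware datetimes keeps the launch cutoff unambiguous, which matters when a comment lands within hours of release.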

As an initial gut check, I fed tens of thousands of YouTube comments from the trailers of four of the most talked-about games (for different reasons) of the last couple of years into our machine. I then averaged the ratings for each game.

What came out was startling:

I hadn’t written down a hypothesis as to what the data would look like prior to visualizing it. But if I had, I don’t think this graph would be far off.

I believe the results largely track with the reception of these games on YouTube. Balatro was a GOTY nominee. Palworld was a massive hit, however much criticism it got for leaning heavily on Pokémon's IP. Starfield was solid, if not much more than a space-skinned Elder Scrolls game. Concord, of course, was a historic flop.

While the initial results were very promising, this analysis wasn’t particularly rigorous for a few reasons. First, the sample was very small. With four games, I certainly could have just gotten lucky. Second, even though I excluded comments created after each game’s launch, AAA titles like Concord and Starfield are playtested by thousands of users before launch. There will be alpha tests with more ‘pre-’ prefixes than might seem reasonable. In other words, many people have often played a AAA title before its trailer is even released, which could influence the results. Finally, games from established publishers, such as Bethesda (Starfield), carry expectations based on their previous titles.

I decided to rerun this analysis with a bigger sample, one that left out big studios with playtesting infrastructure or existing baggage.

Full Methodology

Before going any further, it’s worth mentioning that this is far from a peer-reviewed piece of work. It is a personal side project that piqued my interest for long enough to conduct and share it. While it is probably more rigorous than assessing comments based purely on vibes, much of the work was automated and only superficially verified. It probably didn’t get the attention to detail (which is usually boring and tedious) that science demands.

With that caveat out of the way, here is a bulleted list of my full approach:

Originally, 288 games were selected with release dates between September 17th and October 17th last year (dates were arbitrary) from the GameDiscoverCo Plus database. I whittled this down using the following filters:

No adult-themed games

No F2P games

All games included in the set also…

sold between 1,000 and 250,000 copies…

and had a game trailer on YouTube…

and supported the English language…

and their trailer had at least 10 comments prior to the game’s launch.
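As a rough illustration, the filters above boil down to a single predicate. The `Game` fields here are hypothetical names, not the GameDiscoverCo schema, and the exact boundary handling (at least 1,000 copies, fewer than 250,000) is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Game:
    # Hypothetical fields; the real dataset's column names will differ.
    name: str
    adult: bool
    free_to_play: bool
    copies_sold: int
    has_trailer: bool
    supports_english: bool
    pre_launch_comments: int


def passes_filters(game: Game) -> bool:
    """Apply every inclusion rule from the list above."""
    return (
        not game.adult
        and not game.free_to_play
        and 1_000 <= game.copies_sold < 250_000  # boundary handling is assumed
        and game.has_trailer
        and game.supports_english
        and game.pre_launch_comments >= 10
    )


# Made-up examples: one game that qualifies and two that do not.
games = [
    Game("Example A", False, False, 12_000, True, True, 42),
    Game("Example B", False, True, 50_000, True, True, 300),  # F2P
    Game("Example C", False, False, 800, True, True, 15),     # too few copies
]
selected = [g.name for g in games if passes_filters(g)]
print(selected)
```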

I ended up with 59 games after applying the filters. Then, I…

Used the YouTube API to search for the videoIDs of game trailers, using the following search parameter: "{game_name} steam indie game official trailer". This occasionally led to irrelevant results, so I did some hand pruning of trailers to make sure that they were correct.

Pulled all comments and replies for those videoIDs.

Used OpenAI’s GPT-4o model to analyze the comments and replies.

OpenAI was given the following prompt for each comment: "You are a bot that analyzes the sentiment of YouTube comments for video game trailers. Rate each comment as -1 (negative), 0 (neutral), or 1 (positive). You must respond with a JSON object that follows EXACTLY this structure: {\"sentiment\": -1} or {\"sentiment\": 0} or {\"sentiment\": 1}. The sentiment value must be an integer, not a string. Do not include any explanations or additional text in your response."
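Two of the steps above lend themselves to a small sketch: building the trailer search and checking the model's reply. Treat this as an assumption-laden illustration. The parameter names follow the YouTube Data API's `search.list` call, but `build_trailer_search_params`, `parse_sentiment`, and the default of five results are hypothetical, and nothing here actually calls either API.

```python
import json


def build_trailer_search_params(game_name, max_results=5):
    """Query parameters for a YouTube Data API search.list request."""
    return {
        "part": "snippet",
        "q": f"{game_name} steam indie game official trailer",
        "type": "video",
        "maxResults": max_results,  # assumed; the post does not say how many
    }


def parse_sentiment(raw):
    """Return -1, 0, or 1 from a reply like '{"sentiment": 1}', else None."""
    try:
        value = json.loads(raw)["sentiment"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return None  # not JSON, missing key, or not a JSON object
    # bool is a subclass of int in Python, so rule it out explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        return None
    return value if value in (-1, 0, 1) else None


print(build_trailer_search_params("Example Game")["q"])
print(parse_sentiment('{"sentiment": -1}'))
print(parse_sentiment('Sure! {"sentiment": 1}'))
```

Returning `None` for anything off-script makes it easy to count how often the model ignored the format, instead of silently treating a bad reply as neutral.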

And the final three steps were:

Uploading the file using the OpenAI batch API, which is far cheaper if you don’t need real-time results.

Taking out a couple of extreme outliers.

Analyzing and graphing the data using Python, the Pandas package, and the Cursor text editor.
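The batch upload mentioned above expects a JSONL file with one request per line. Here is a minimal sketch of how such a file might be assembled. The `custom_id` scheme, the choice to send each comment as the user message, and the shortened system prompt are assumptions for illustration, not a record of the actual script.

```python
import json

# Shortened version of the prompt quoted earlier in the post.
SYSTEM_PROMPT = (
    "You are a bot that analyzes the sentiment of YouTube comments for "
    "video game trailers. Rate each comment as -1 (negative), 0 (neutral), "
    "or 1 (positive)."
)


def batch_line(custom_id, comment_text, model="gpt-4o"):
    """Serialize one chat-completion request as a single JSONL line."""
    request = {
        "custom_id": custom_id,  # used to match results back to comments
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": model,
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": comment_text},
            ],
        },
    }
    # json.dumps escapes embedded newlines, so each request stays on one line.
    return json.dumps(request, ensure_ascii=False)


def build_batch_file(comments):
    """Join one request per comment; `comments` maps an id to its text."""
    return "\n".join(batch_line(cid, text) for cid, text in comments.items())


# Made-up comments, including one with a line break.
sample = {"video1-c1": "This looks amazing!", "video1-c2": "Pass.\nNot for me."}
jsonl = build_batch_file(sample)
print(len(jsonl.splitlines()))
```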

One final note: GameDiscoverCo, the data source used to conduct the analysis of the indie games, estimates game revenue based on reviews-to-sales ratios and soft adjustments based on things like genre and price. With that said, I believe it to be fairly accurate, certainly when making comparisons between games.

Going Broader

To get a better sample, I turned to Simon Carless’ incredible trove of Steam game data, GameDiscoverCo. After some sampling, cleaning, and pruning (detailed above), I ended up with over 18,000 comments from 59 indie games that were launched in the latter half of 2024. These games were not hits (fewer than 250,000 copies sold), but not so small as to be completely devoid of data (at least 1,000 copies sold).

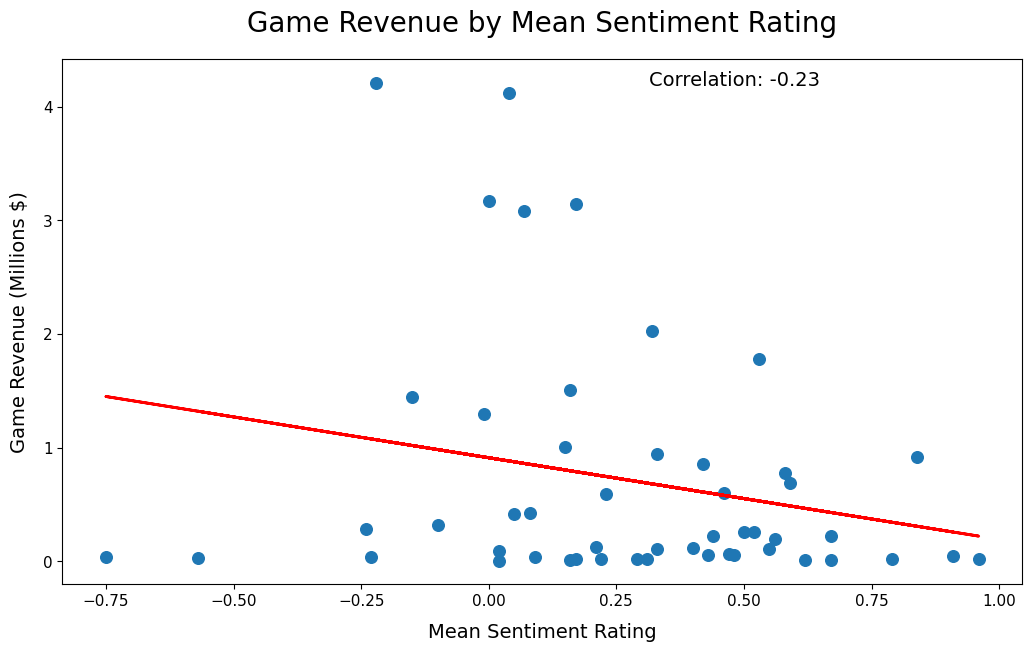

The results here turned out to be far less remarkable in some ways, but more remarkable in others.

The effect that we saw with the bigger titles completely disappeared. If anything, it seemed that the comment sentiment was negatively associated with game performance (though this relationship wasn’t strong enough to be of note).
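One way to put a number on a relationship like this is a rank correlation, which copes better with a handful of runaway sellers than a plain average does. The sketch below is a standard-library Spearman correlation run on made-up numbers. It is an assumption about how one might check the pattern, not the statistic behind the chart.

```python
from math import sqrt


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(xs, ys):
    """Pearson correlation; raises ZeroDivisionError if either side is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def spearman(xs, ys):
    """Spearman correlation: Pearson computed on the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Made-up per-game numbers: average sentiment and copies sold.
sentiment = [0.8, 0.5, 0.1, -0.2, 0.6]
copies = [3_000, 40_000, 12_000, 90_000, 5_000]
print(round(spearman(sentiment, copies), 2))
```

A value near zero would match the "no real relationship" reading, while a clearly negative one would back up the hint that grumpier comment sections went with better sales.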

Whenever you don’t see the results you hoped for in any sort of analysis, it’s certainly easy to be disappointed.

Reflecting on it further, I’ve come around to thinking it might be even more remarkable that opinions formed from watching game trailers don’t actually seem to matter at all.

It turns out comments like, “The 3D just ruined it honestly. Pass,” or “This definitely has to be an April fool's joke,” or a personal favorite, “Bold design choice to make everyone ugly and unpleasant to look at,” just don’t seem to have any bearing on whether the game will do well, at least when looking at smaller indie titles.

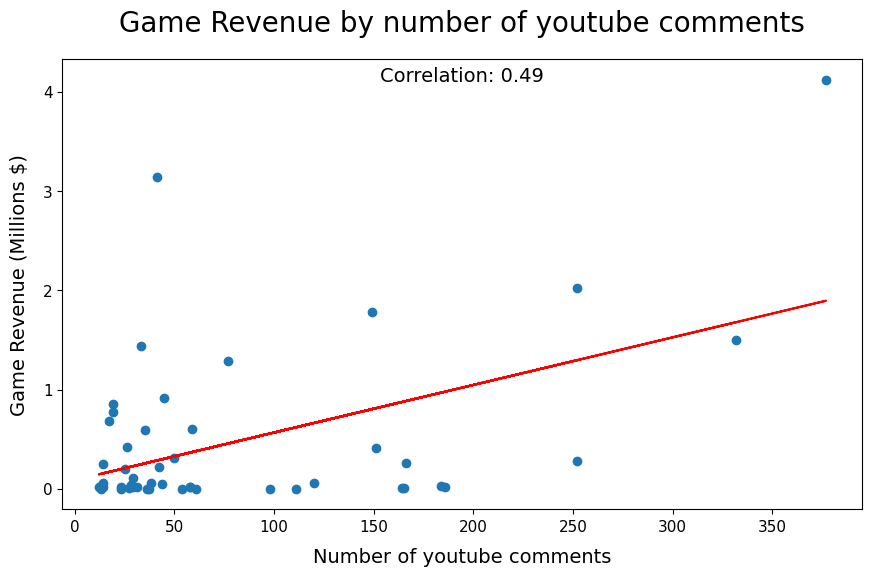

Even though sentiment didn’t seem to predict much, the number of comments left on the trailers did seem to have a fairly robust relationship with game revenue. It’s easy to see why: The number of comments is strongly associated with the number of views, which would only increase the level of awareness about a game. For an indie title jostling in an extremely crowded marketplace, every bit of awareness likely helps, even if it is the result of disgruntled comments.

Ultimately, the matter is far from settled. It’s easy to imagine this analysis turning out differently had I switched up my approach. A different ChatGPT prompt, a more thorough vetting of the game trailers, or a different selection of games could certainly have led to different results.

For now at least, predicting game success seems to be no less inscrutable, even when looking at YouTube comments.

Coda

I have two primary takeaways after reading Christian Yoder’s piece:

For independent games, the amount of engagement you get is probably more predictive of future success than sentiment. Awareness and interest are the primary problems for indies.

I should probably write a post about potential uses of AI tools in games marketing, even though I’d really rather keep my fingerprints off that grenade.

Please sound off in the comments on any of the above, and follow my boy Yoder on social.

—Rigney
